MI300X FP8 Data‑Parallel Benchmarks (8–64 GPUs): H200 Left Behind, B200 Within Reach

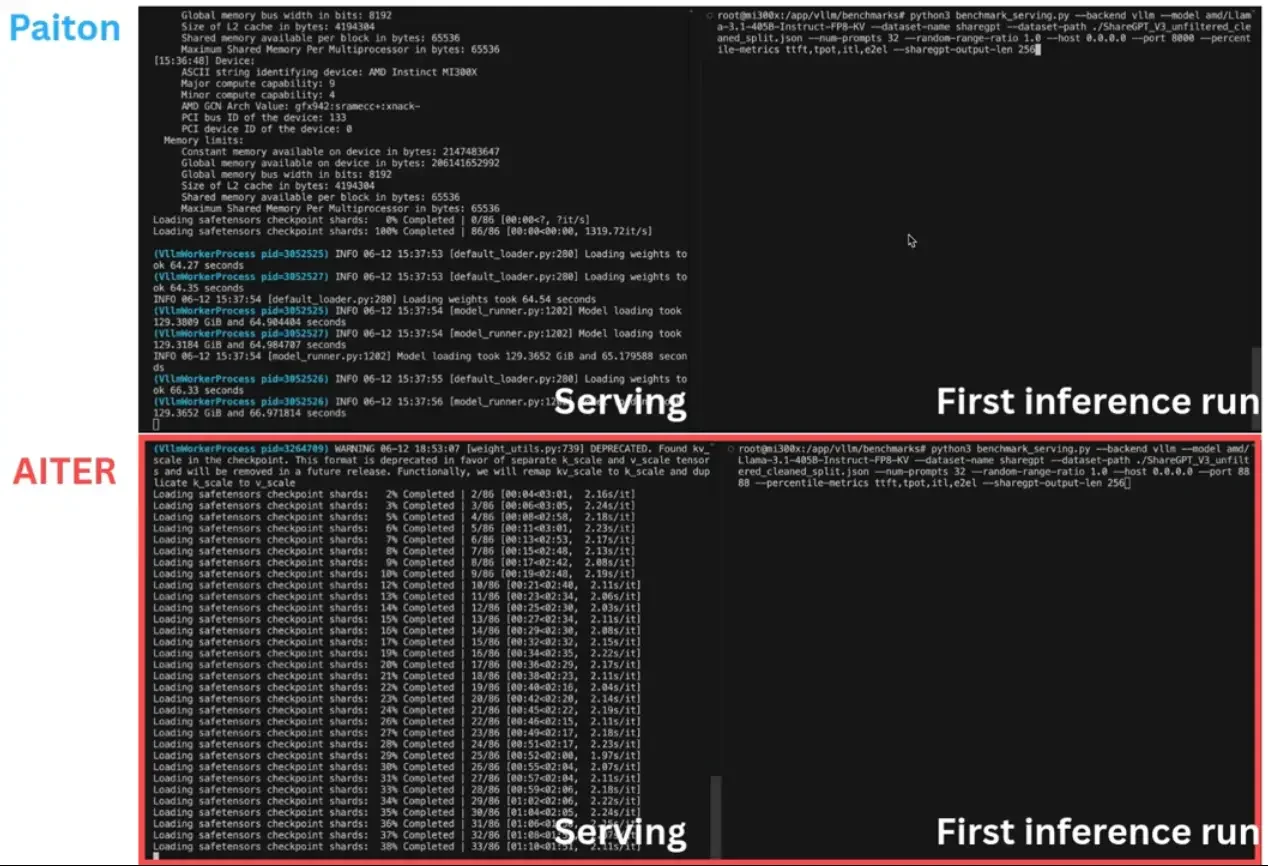

At ElioVP, we’re all about pushing AI inference past the limits, and packaging every squeeze of performance into a plug‑and‑play…

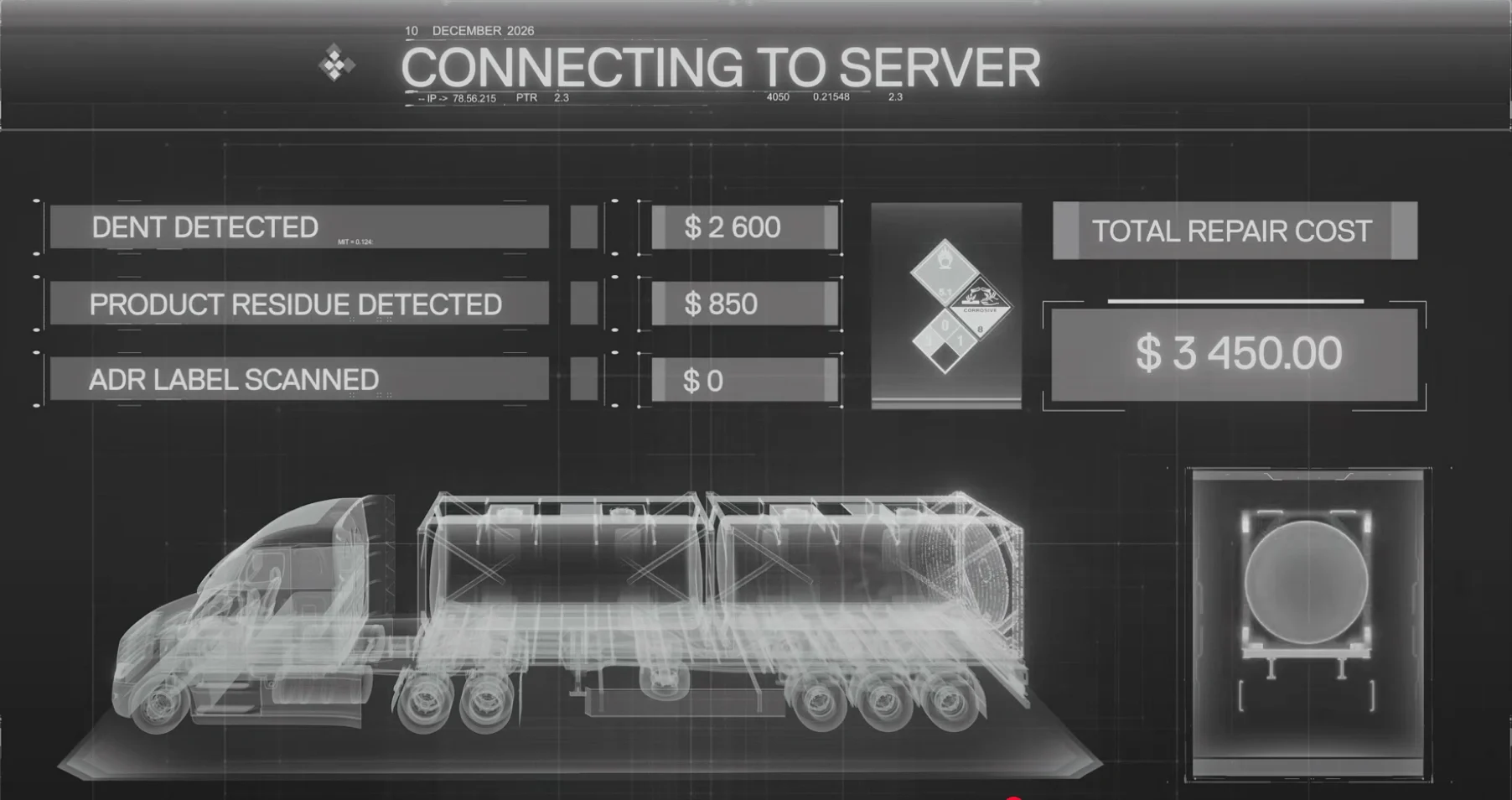

Here at Eliovp, we continuously innovate when it comes to building practical solutions. If there's one core strength, it’s our…

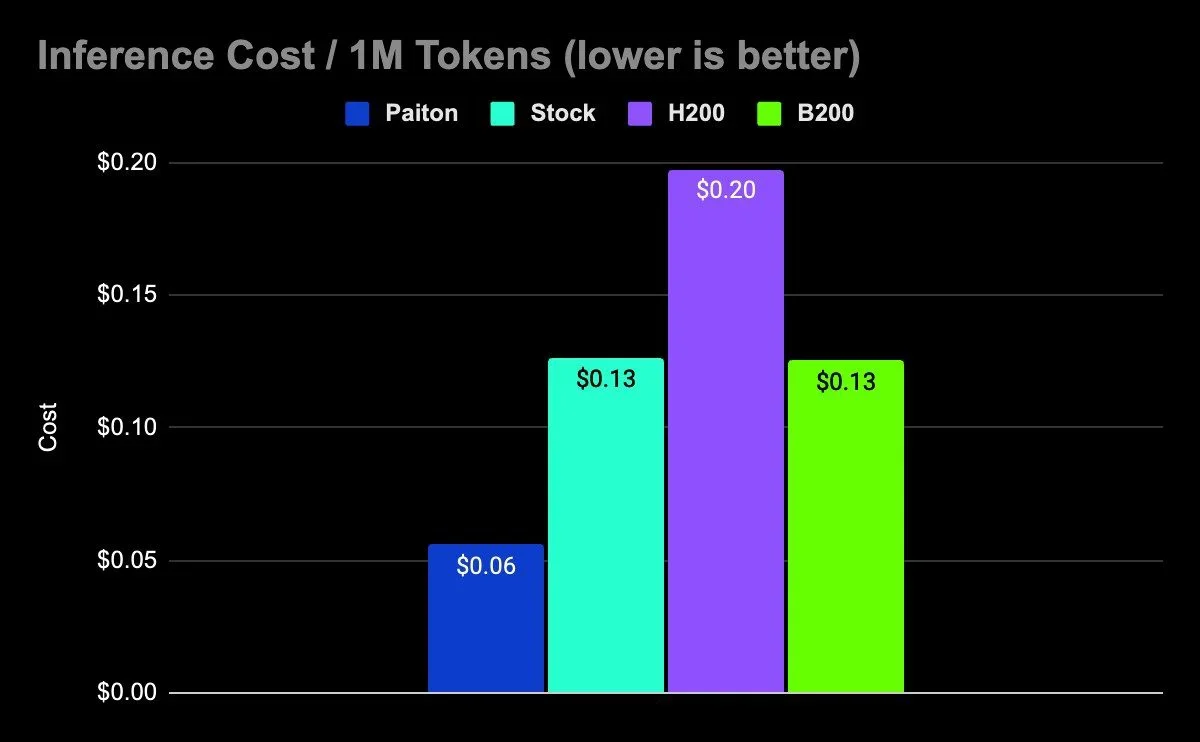

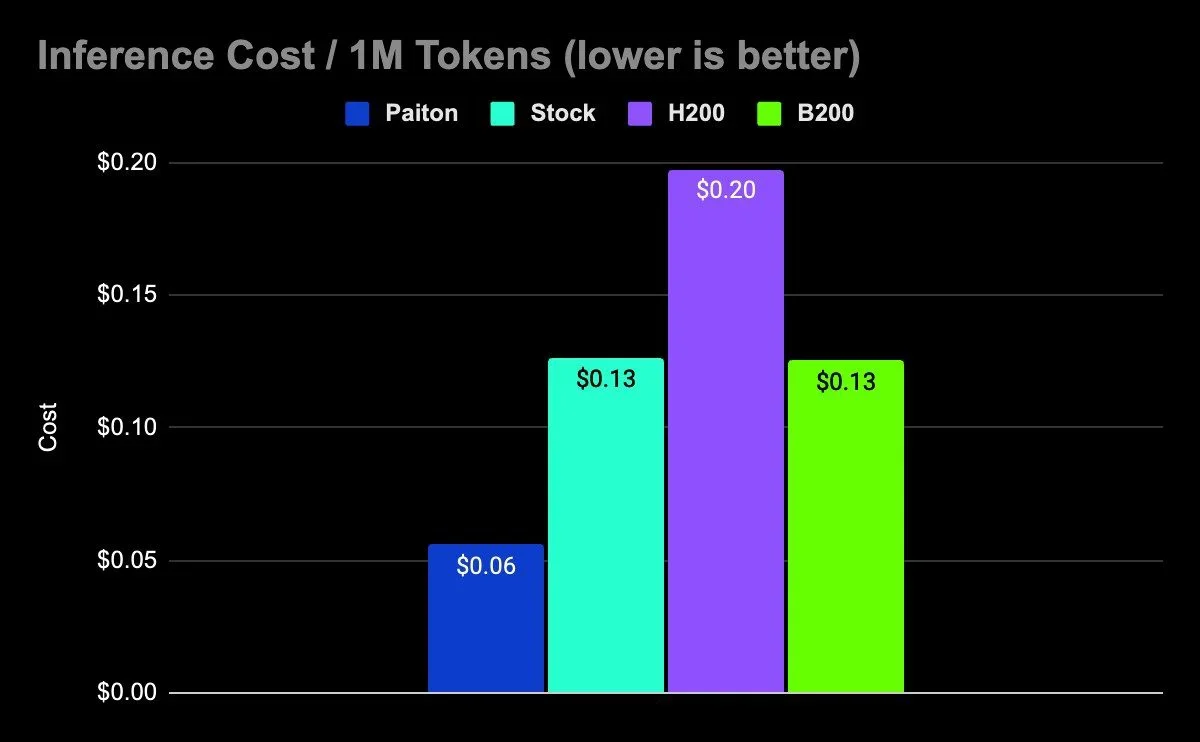

Introduction: AI is rapidly transforming every industry, but running large models efficiently remains a major technical and financial challenge. At…

With Paiton, we're not merely pursuing peak inference speeds; we're fundamentally reshaping the entire lifecycle of large language model (LLM)…