Applicable AI for Businesses

Here at Eliovp, we continuously innovate when it comes to building practical solutions. If there's one core strength, it’s our…

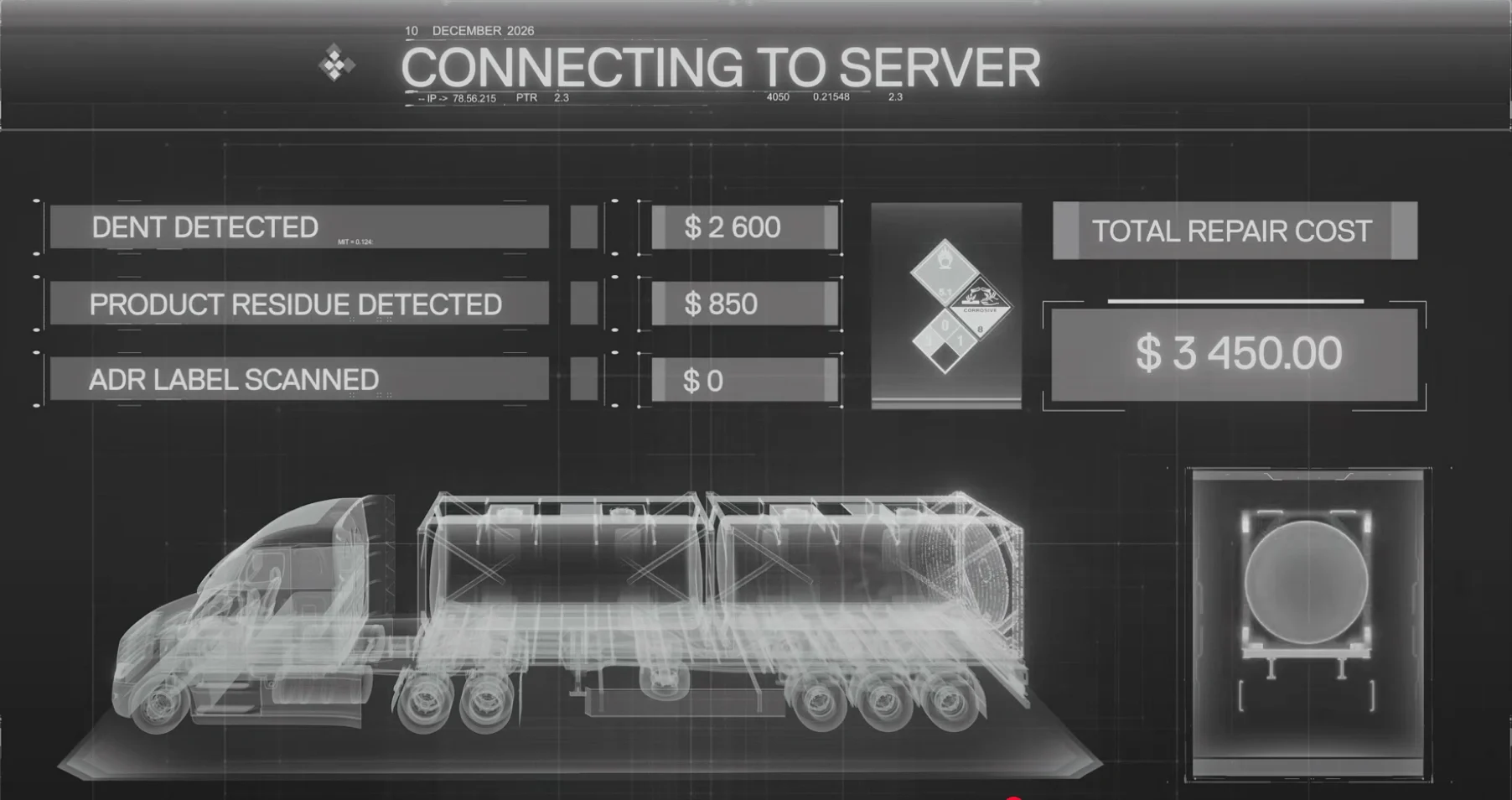

AI is rapidly transforming every industry, but running large models efficiently remains a major technical and financial challenge. At…

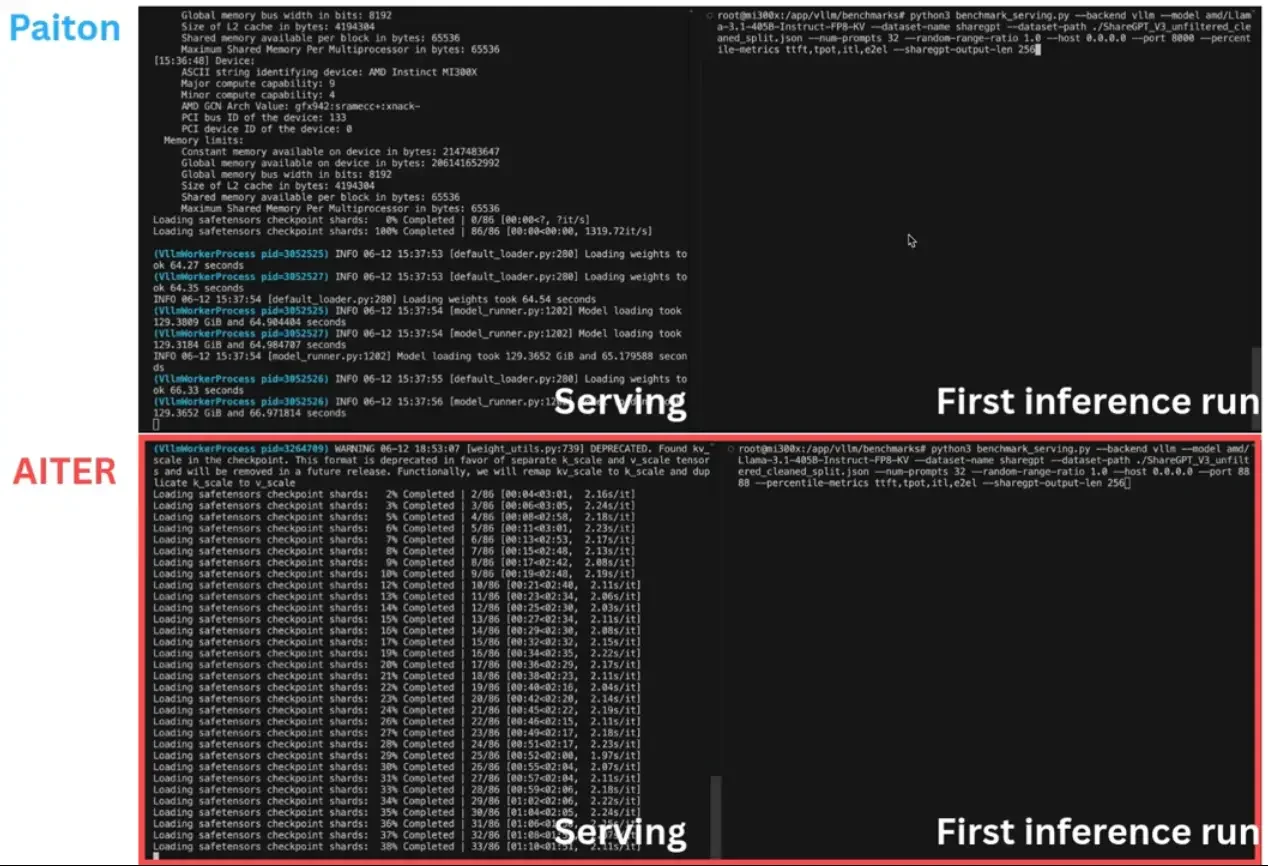

With Paiton, we're not merely pursuing peak inference speeds; we're fundamentally reshaping the entire lifecycle of large language model (LLM)…

The world of AI is moving at an unprecedented pace, and efficient inference is key to deploying powerful models in…