AI Model Optimization with Paiton

In the fast-paced world of artificial intelligence, model efficiency and performance are paramount. At ElioVP, we’re redefining what’s possible by delivering unparalleled optimization solutions for AI models with Paiton. By compiling the model’s architecture and leveraging our custom-written kernels, Paiton enables faster inference and reduced resource consumption on AMD GPUs.

Why Model Optimization is More Important Than Ever

As AI models become more sophisticated, their computational demands grow rapidly. Many organizations are hitting performance bottlenecks due to large model sizes and limited hardware efficiency. Conventional frameworks often leave performance on the table, especially on high-end GPUs equipped with vast amounts of VRAM. That’s where Paiton steps in, bridging the gap between theoretical GPU power and real-world AI performance.

Our Solution: The Paiton Framework

Paiton’s unique approach addresses these challenges head-on. Here’s how:

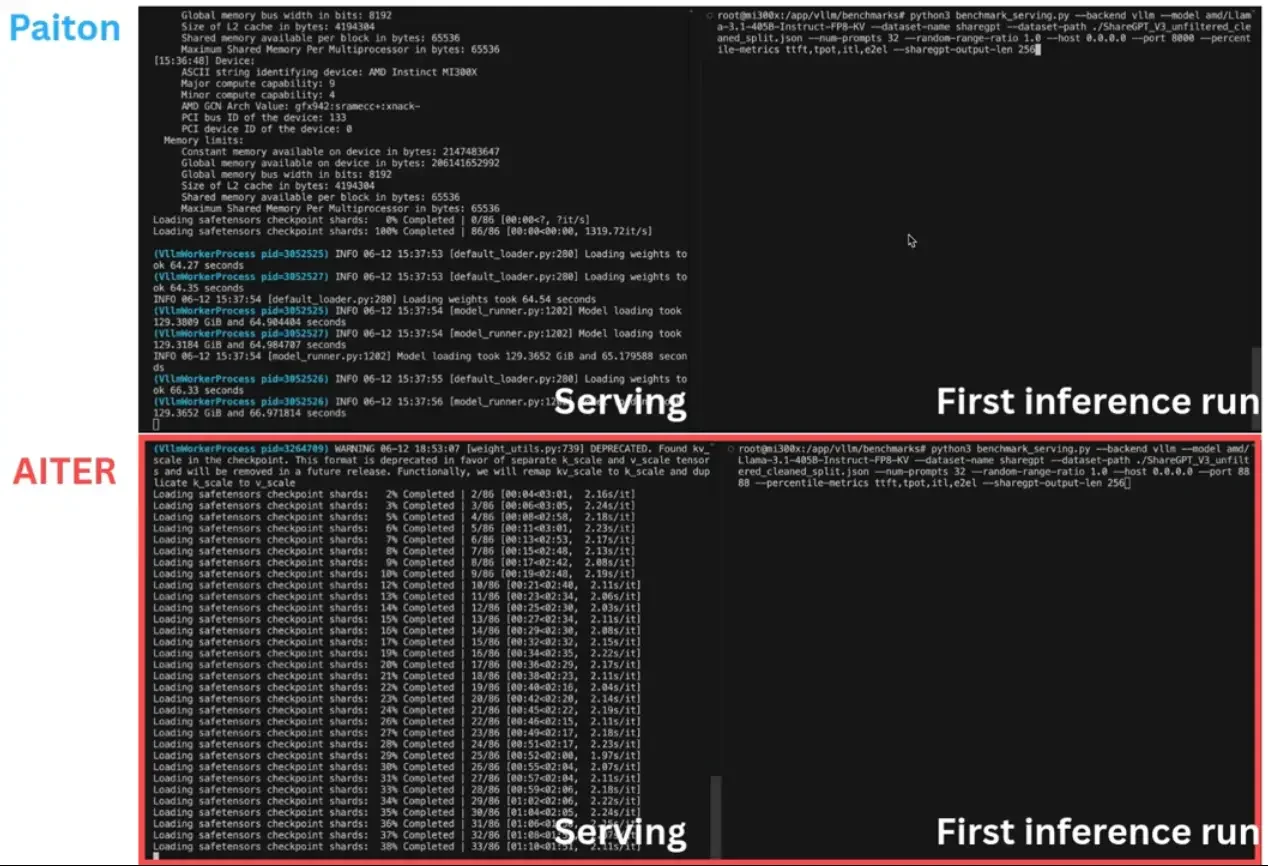

Model Compilation

We compile the AI model’s architecture into highly optimized shared-object (.so) libraries. This process removes inefficiencies inherent in general-purpose frameworks and tailors the execution pipeline to the specific hardware it runs on.

Custom Kernels

Our team has developed specialized kernels that go beyond standard libraries by targeting specific AMD GPUs (yes, we even optimize for the MI200 series, not just the MI300). These kernels are designed to maximize throughput while minimizing latency, so that every operation, from matrix multiplications to attention mechanisms, performs at peak efficiency.

Fused Kernels

By combining multiple operations into a single, highly efficient kernel, we avoid writing intermediate results out to GPU memory and reading them back, which reduces memory overhead and improves execution speed. These fused kernels are particularly effective for complex AI operations like multi-head attention and tensor reshaping, ensuring fast performance on AMD GPUs.
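To make the idea of fusion concrete, here is a minimal, CPU-only sketch in plain Python. It is not Paiton code and does not run on a GPU; the function names and the choice of scale, bias, and ReLU as the operation chain are assumptions made purely for illustration. The unfused version makes three passes and builds two intermediate buffers, while the fused version produces the same result in one pass.

```python
from typing import List


def unfused_scale_bias_relu(x: List[float], scale: float, bias: float) -> List[float]:
    """Three separate passes; each one materializes a full intermediate list.

    On a GPU, each pass would correspond to its own kernel launch, with the
    intermediate buffers written to and read back from device memory.
    """
    scaled = [v * scale for v in x]          # pass 1: intermediate buffer
    shifted = [v + bias for v in scaled]     # pass 2: another intermediate buffer
    return [max(v, 0.0) for v in shifted]    # pass 3: ReLU


def fused_scale_bias_relu(x: List[float], scale: float, bias: float) -> List[float]:
    """One pass; the intermediate values never leave the loop body."""
    return [max(v * scale + bias, 0.0) for v in x]


if __name__ == "__main__":
    data = [-2.0, -0.5, 0.0, 1.5, 3.0]
    # Both variants compute the same values; only the number of passes differs.
    print(unfused_scale_bias_relu(data, 2.0, 1.0))
    print(fused_scale_bias_relu(data, 2.0, 1.0))
```

The point of the sketch is the shape of the computation rather than its speed in Python: the fused form touches each input element once and allocates only the output.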

Real-World Impact: Faster Inference, Smarter AI

By integrating Paiton into your workflow, you can achieve:

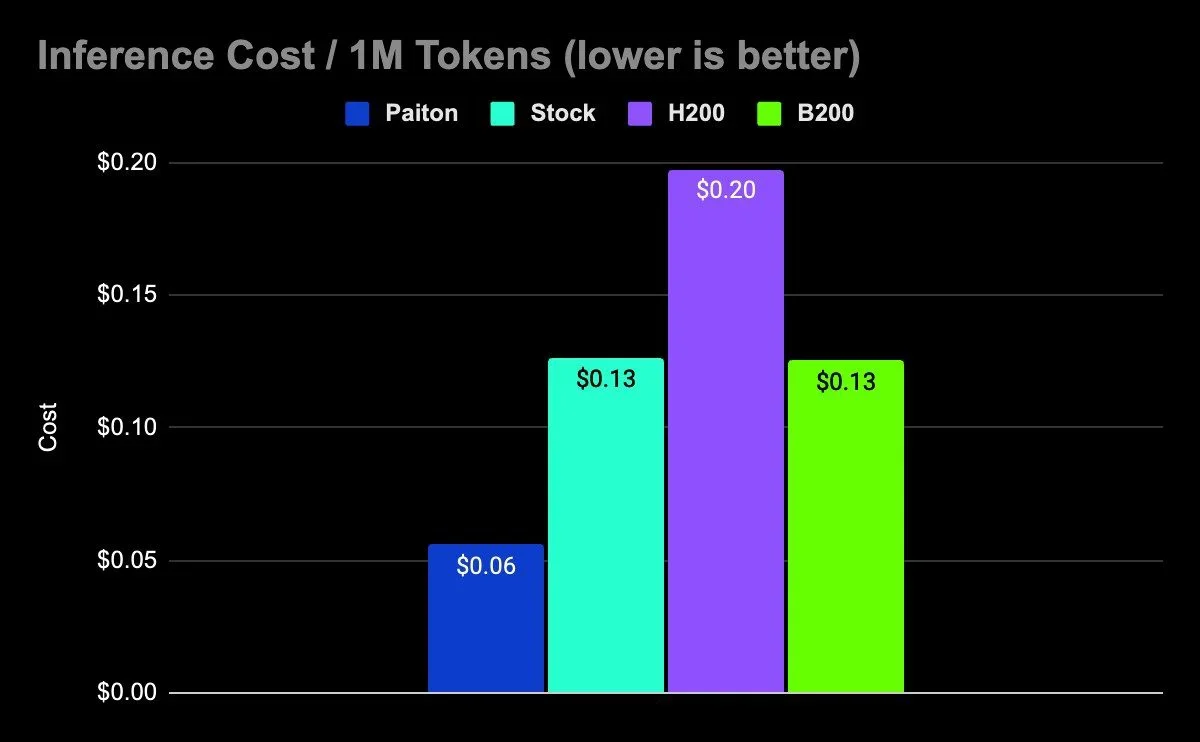

- Up to 80% Faster Online Inference: Experience a significant reduction in latency and increased throughput.

- Reduced Energy Costs: Optimized models consume less power, making them more eco-friendly and cost-effective.

- Improved Model Deployment: Simplify the process of deploying AI solutions on diverse platforms, from cloud environments to edge devices.
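If you want to check latency and throughput gains like these on your own workload, a small timing harness is usually enough to start. The sketch below is generic Python and not part of Paiton; `benchmark` and `speedup_percent` are hypothetical helper names, and reading "X% faster" as a throughput gain is an assumption, since the figure above does not define the metric.

```python
import statistics
import time
from typing import Callable, Dict


def benchmark(fn: Callable[[], object], warmup: int = 3, iters: int = 20) -> Dict[str, float]:
    """Time repeated calls to fn and report latency and throughput."""
    for _ in range(warmup):
        fn()  # warm-up runs are excluded from the measurement

    samples = []
    for _ in range(iters):
        start = time.perf_counter()
        # For GPU work, fn should block until the device has finished;
        # otherwise only the asynchronous launch is being timed.
        fn()
        samples.append(time.perf_counter() - start)

    mean = statistics.fmean(samples)
    return {
        "mean_latency_s": mean,
        "median_latency_s": statistics.median(samples),
        "throughput_per_s": 1.0 / mean if mean > 0 else float("inf"),
    }


def speedup_percent(baseline_latency_s: float, optimized_latency_s: float) -> float:
    """Throughput gain of the optimized run over the baseline, in percent."""
    return (baseline_latency_s / optimized_latency_s - 1.0) * 100.0


if __name__ == "__main__":
    # Stand-in workloads; replace them with calls into your own inference paths.
    baseline = benchmark(lambda: sum(i * i for i in range(20000)))
    optimized = benchmark(lambda: sum(i * i for i in range(10000)))
    gain = speedup_percent(baseline["mean_latency_s"], optimized["mean_latency_s"])
    print(f"throughput gain: {gain:.1f}%")
```

Run the baseline and the optimized model with the same inputs, batch size, and hardware, and compare medians as well as means, since a few slow iterations can skew the average.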

Why Choose Paiton?

Paiton is more than just a tool; it’s a paradigm shift in how AI models are optimized and deployed. Our expertise in compiling architectures and crafting custom kernels for AMD GPUs sets us apart, delivering results that generic frameworks simply can’t match. With Paiton, you’re embracing the future of AI model optimization.

Get Started Today

Ready to take your AI models to the next level? Contact us to learn more about Paiton and how we can help you unlock the full potential of your AI applications.

Let’s build smarter, faster, and more efficient AI together.

– The Paiton Team –