Paiton FP8 Beats NVIDIA’s H200 on AMD’s MI300X

The world of AI is moving at an unprecedented pace, and efficient inference is key to deploying powerful models in…

As large language models (LLMs) become a foundational part of modern applications, picking the right server for deployment is more…

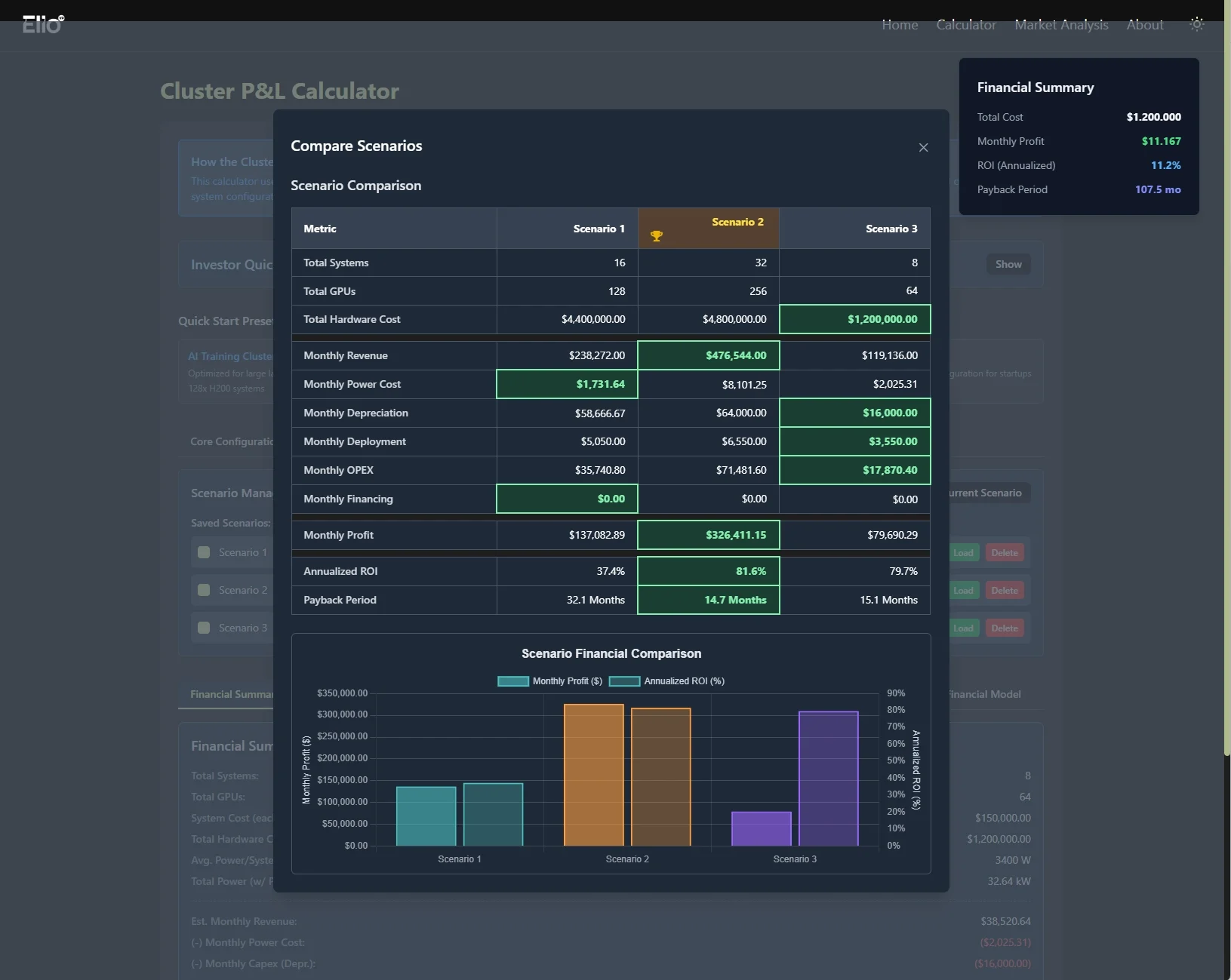

At Eliovp BV, we’ve spent years on the cutting edge of GPU cluster deployment and optimization across Europe. Our team…

Qwen3-32B on Paiton + AMD MI300X vs. NVIDIA H200

1. Introduction

“While we’re actively training models for local customers, automating and…