Optimizing QwQ-32B (by Qwen): AMD MI300X vs. NVIDIA H200

1. Introduction

In the world of large language models (LLMs), most benchmarks center on Llama or DeepSeek derivatives. We decided to diversify by adding the Qwen2 architecture, using our Paiton framework. This 32-billion-parameter model pushes GPU resources to the limit, perfect for comparing NVIDIA’s new H200 to our AMD MI300X, which leverages Paiton for advanced concurrency and custom kernel compilation.

Why QwQ-32B?

- High-Parameter Model: 32B parameters can handle intricate dialogues, domain-specific knowledge, and advanced reasoning.

- Less Common: Goes beyond the typical Llama/DeepSeek solutions, letting us highlight Paiton’s flexibility in optimizing a broader range of LLMs.

- Incredible results: QwQ-32B, specifically, is showing great results compared to Deepseek-R1, GPT-4o, Claude 3.7 and other models which are relatively large.

These results can be found here: Artificial Analysis AI

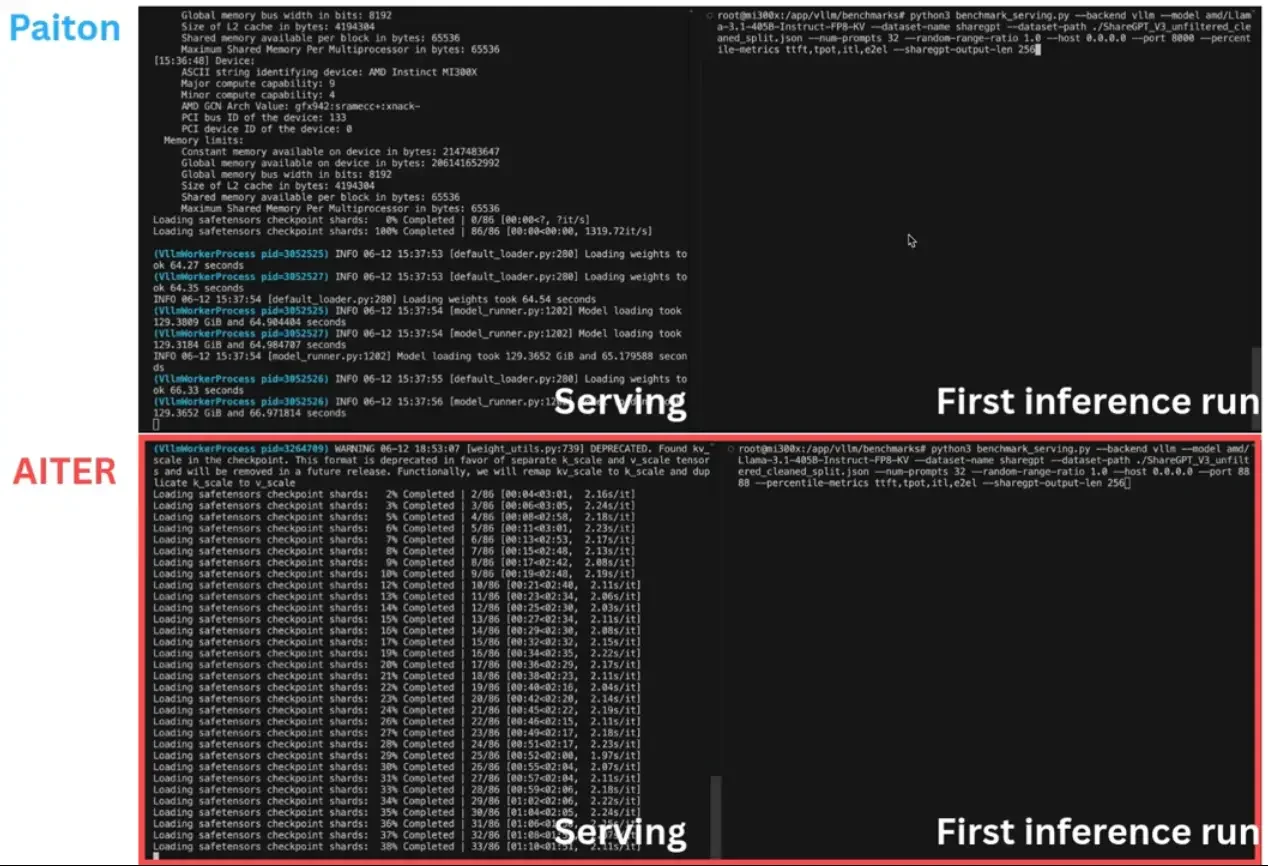

2. Paiton & vLLM Versions

Paiton on AMD MI300X

- vLLM: We used vLLM 0.7.3 for Paiton. Although newer versions (like 0.8.0) exist, our test system was configured with 0.7.3, which still benefits from many concurrency improvements.

- Flags: Standard environment, HIP-based concurrency, no hidden toggles besides Paiton’s kernel fusion.

Stock Model Runs

- NVIDIA H200: Running vLLM 0.8.0 with the best tested options:

- CUDA Graph capturing enabled (via default or explicit flags)

- “V1” concurrency scheduling (default in vLLM 0.8.0, if use-case supported)

- AMD MI300X (Stock): For direct “stock vs. Paiton” comparison, we also tested the same vLLM version as Paiton (vLLM 0.7.3) with “best” possible flags:

--num_sched_steps=10- CUDA Graph capturing (where relevant)

- Disabling or enabling certain features for optimal concurrency

We wanted to ensure each GPU had the best possible environment.

Note: On the AMD side (stock runs), we do not apply our custom kernel compilation or concurrency logic from Paiton, so we can see the native performance of the MI300X.

3. Test Setup & Methodology

Hardware

- NVIDIA H200 (single GPU)

- AMD MI300X (single GPU), tested in two modes:

- Paiton-optimized

- Stock vLLM, same version as Paiton (0.7.3), with best concurrency flags.

Model & Command

QwQ-32B served with:

vllm serve Qwen/QwQ-32B --num-scheduler-steps=10 --swap-space=16 ...

- For the H200, we used the default concurrency approach (-tp 1 or similar) plus “V1 scheduling.”

- Batch sizes tested: from 1 up to 128.

Metrics: Requests/s, Output Token Throughput, TTFT, TPOT, ITL, and E2E Latency.

4. Results: Stock Model Runs vs. Paiton

We’ve split the performance data into two main tables, one for Throughput metrics and one for Latency metrics. Afterward, you’ll see Graphs integrated into the text, each accompanied by Notes on how they illustrate the comparative performance of AMD MI300X vs. NVIDIA H200.

4.1 Throughput Tables

Stock Model: AMD MI300X

| Batch Size | Request Throughput (req/s) | Output Token Throughput (tok/s) | Total Token Throughput (tok/s) |

| 1 | 0.40 | 48.02 | 52.86 |

| 2 | 0.11 | 49.51 | 51.52 |

| 4 | 0.22 | 73.42 | 77.38 |

| 8 | 0.44 | 120.28 | 183.72 |

| 16 | 0.96 | 220.17 | 412.65 |

| 32 | 1.79 | 403.23 | 829.22 |

| 64 | 3.21 | 676.22 | 1467.65 |

| 128 | 4.94 | 1079.45 | 2226.07 |

Stock Model: NVIDIA H200

| Batch Size | Request Throughput (req/s) | Output Token Throughput (tok/s) | Total Token Throughput (tok/s) |

| 1 | 0.42 | 49.74 | 54.76 |

| 2 | 0.13 | 57.84 | 60.18 |

| 4 | 0.26 | 85.11 | 89.71 |

| 8 | 0.52 | 142.21 | 217.21 |

| 16 | 1.01 | 231.77 | 434.38 |

| 32 | 1.86 | 418.62 | 860.89 |

| 64 | 3.44 | 728.66 | 1574.56 |

| 128 | 6.00 | 1311.51 | 2704.78 |

Paiton on AMD MI300X

| Batch Size | Request Throughput (req/s) | Output Token Throughput (tok/s) | Total Token Throughput (tok/s) |

| 1 | 0.44 | 52.03 | 57.27 |

| 2 | 0.14 | 60.27 | 62.71 |

| 4 | 0.27 | 87.92 | 92.67 |

| 8 | 0.53 | 144.27 | 220.35 |

| 16 | 1.02 | 234.10 | 438.76 |

| 32 | 1.90 | 427.54 | 879.22 |

| 64 | 3.40 | 715.60 | 1552.62 |

| 128 | 5.56 | 1211.53 | 2503.30 |

Graph 1: Requests/s vs. Batch Size

(Higher is better.)

Note: Notice how at small-to-mid batch sizes (e.g., 1 to 32), Paiton on MI300X matches or exceeds the H200’s requests-per-second. As batch size grows beyond 64, the H200 still holds a slight lead, but Paiton narrows the gap compared to the “stock” AMD runs. This underscores AMD MI300X’s capability to outperform or closely match NVIDIA H200 under many concurrency conditions when optimizations (Paiton) are applied.

4.2 Latency Tables

Stock Model: AMD MI300X

Stock Model: NVIDIA H200

Paiton on AMD MI300X

Graph 2: Time-to-First-Token (TTFT) vs. Batch Size

(Lower is better)

Note: TTFT is the delay before a single token is produced. At low batch sizes, H200 is slightly ahead. However, as soon as we apply Paiton on the MI300X, the difference at small concurrency shrinks. The data also shows that once you move beyond very small batch sizes (e.g., 8 or 16), AMD + Paiton can keep TTFT in a competitive range.

Graph 3: Mean End-to-End Latency (E2E) vs. Batch Size

(Lower is better)

Note: E2E Latency accounts for the entire request: from the time the prompt is submitted to the time all tokens are generated. With Paiton optimizations, the MI300X consistently lowers E2E latency compared to stock AMD results and can rival the H200 in many batch-size scenarios.

5. Analysis & Observations

Comparison at Small Batches

- Batch=1 or 2: Paiton on MI300X and the H200 are extremely close in throughput (~0.4–0.44 req/s).

- TTFT for Paiton on MI300X is somewhat higher (61 ms vs. 44 ms on H200 at batch=1), but the overall E2EL remains in the same range (~2.29–2.48 s).

Graph 4: Requests/s vs. E2E Latency (small batches)

(Lower is better)

Note: If we specifically plot requests/s against end-to-end latency for small batch sizes (1–8), the MI300X with Paiton can not only match but, in some runs, exceed the concurrency of the H200. This is a key example of how targeted optimizations can push AMD’s hardware further.

Mid Batches (16–32)

- AMD + Paiton has a slight edge in req/s (1.9 vs. 1.79 at batch=32, for instance) over AMD Stock and is catching up to H200’s concurrency.

- TTFT sees a jump (472–928 ms range), which is typical for big LLMs, but Paiton’s concurrency approach helps keep total tokens/s high.

Graph 5: Total Throughput (tok/s) vs. Batch Size

(Higher is better)

Note: Total token throughput (input + output tokens) scales significantly with concurrency. Notice how Paiton on MI300X actually outperforms the H200 at certain mid-range batches in terms of tokens processed per second, showcasing that the MI300X architecture + Paiton’s kernel fusion can shine when carefully tuned.

High Batches (64–128)

- H200 leads (for now) at batch=128 with 6.0 req/s vs. 5.56 on Paiton, but Paiton outperforms the stock AMD MI300X at 4.94 req/s.

- E2EL can still be ~9–12 seconds at these large batches, consistent with the heavy token generation tasks for a 32B model.

Graph 6: P99 End-to-End Latency vs. Batch Size

(Lower is better)

Note: The P99 E2E Latency at very high concurrency is understandably large. Even though the H200 tops out with a slightly higher request throughput, MI300X with Paiton significantly narrows the performance gap. This points to the strong potential of further concurrency refinements, eventually surpassing NVIDIA’s performance in certain workloads.

Summary: While H200 might show a slight lead at the highest concurrency, Paiton significantly boosts the AMD MI300X performance beyond the stock approach, especially at small to mid batch sizes. This suggests real-world AI clusters could see better scaling using Paiton if concurrency or batch scheduling is carefully tuned.

6. Emphasizing AMD’s Strength & Ongoing Improvements

- HBM Advantage: The MI300X’s large HBM memory ensures stable performance across a wide token range; even at bigger batch sizes, the system remains stable.

- Kernel Compilation: Paiton’s custom approach yields better concurrency, especially for smaller batch operations, bridging typical overhead.

- Not “There” Yet: In some highest-batch cases, the H200 retains an edge, but we’re actively refining Paiton’s kernel launching and concurrency strategies to close that gap.

QwQ-32B was less commonly benchmarked, so supporting it within Paiton showcases our library’s model-agnostic design. Expect further gains as we adopt or adapt to vLLM 0.8.x concurrency improvements on AMD.

7. Next Steps & Future Work

- Tensor Parallelism: Evaluating multi-GPU scaling for QwQ-32B, especially if AI clusters want sub-2 second latencies.

- Advanced Quantization: 8-bit or 4-bit compression to reduce memory footprint and further speed up inference.

- Further Kernel Optimizations: Utilize our current and new optimization techniques to push the hardware even further.

- RAG: How Paiton improves Retrieval Augmented Generation.

8. Conclusion

QwQ-32B offers a fresh perspective on large-scale inference beyond common Llama-based benchmarks. Our results show:

- Paiton optimizing the AMD MI300X to significantly outperform “stock” AMD runs.

- Competitive or better performance at small to mid batch sizes vs. NVIDIA’s H200.

- Room to improve further at the larger batch sizes to beat the H200, which we’ll address with advanced concurrency.

Stay tuned for more updates on further integration and optimizations, cluster concurrency testing, and new optimization breakthroughs for AMD-based solutions. If you have any questions or want to see specific tests, reach out, we’re committed to refining Paiton for the widest range of models and hardware.

Here we’ve demonstrated how crucial concurrency and kernel-level optimizations are in closing (and often exceeding) the performance gap with NVIDIA’s latest hardware. With Paiton, the AMD MI3** series is destined to become a top choice for high-throughput, large-model inference.

Thank you for reading!

– The Paiton Team –