Paiton: Dramatically Faster Startup and Performance for Llama-3.1-405B

With Paiton, we’re not merely pursuing peak inference speed; we’re reshaping the entire lifecycle of large language model (LLM) deployment. Our latest work pairs AMD’s MI300X GPUs with the Llama-3.1-405B-Instruct-FP8-KV model and delivers two results:

- Instant-On Startup: Significantly reducing cold-start delays for massive LLM deployments.

- Advanced Tensor Parallelism (TP): Dramatically enhancing inference throughput and slashing latency through sophisticated TP optimizations.

Visual Demonstration: Startup Speed Showcase

We’re excited to share a visual demonstration of Paiton’s startup performance. Watch below how Paiton turns a typically sluggish startup into a responsive one. The demonstration also includes the first inference run after startup, so you see the whole picture from startup to first request.

Benchmarking Testbed & Methodology

For transparency and reproducibility, here is our full benchmarking setup:

- Inference Library: vLLM v0.9.0 with amd/Llama-3.1-405B-Instruct-FP8-KV

- Hardware: 8 × AMD MI300X GPUs (192 GB HBM3 per GPU, 1,536 GB total)

- Software Stack: ROCm 6.3.1 on Ubuntu 22.04 (notably still utilizing an older driver stack)

- Batch Size: 32, representative of realistic, interactive AI workloads

- Measurements: Averages over 10 runs, covering startup time, cold-start and steady-state time to first token (TTFT), and end-to-end latency

Key Highlight: Across these runs, Paiton delivers stable, repeatable performance, avoiding the run-to-run variability common in other inference solutions.
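Averaging over repeated runs and checking their spread can be sketched in a few lines of Python. This is a minimal illustration, not our benchmarking harness: the helper `summarize_runs` and the sample values are hypothetical placeholders, not measured data.

```python
import statistics


def summarize_runs(samples):
    """Return the mean, sample standard deviation, and coefficient of variation."""
    if len(samples) < 2:
        raise ValueError("need at least two runs to estimate variability")
    mean = statistics.mean(samples)
    stdev = statistics.stdev(samples)
    return {"mean": mean, "stdev": stdev, "cv_percent": 100.0 * stdev / mean}


# Made-up timings in seconds for 10 runs (placeholders, NOT benchmark results).
example_runs = [100.0, 102.0, 98.0, 101.0, 99.0, 100.0, 103.0, 97.0, 100.0, 100.0]

summary = summarize_runs(example_runs)
print(f"mean={summary['mean']:.2f}s "
      f"stdev={summary['stdev']:.2f}s "
      f"cv={summary['cv_percent']:.2f}%")
```

A low coefficient of variation across the 10 runs is what "stable" means in practice: the average is representative of any single run.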

LLM Startup: The Critical Bottleneck

Deploying large-scale LLMs like Llama-3.1-405B-Instruct-FP8-KV is a significant engineering challenge. Startup delays commonly arise from:

- Model Weight Loading: Transferring massive sets of parameters from storage to GPU memory.

- Graph Compilation: Transforming high-level model definitions into optimized execution plans.

- Initial Warm-up: Performing preliminary inferences to reach peak operational efficiency.

These delays directly impact scalability, developer productivity, operational cost-efficiency, and end-user experience.
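To see where startup time goes, each stage can be timed separately. The sketch below is one way to do that with the Python standard library; the `StartupTimer` class and the `time.sleep` calls are hypothetical stand-ins for the real loading, compilation, and warm-up work, and they do not reflect how vLLM or Paiton instrument startup internally.

```python
import time
from contextlib import contextmanager


class StartupTimer:
    """Accumulates wall-clock time per named startup stage."""

    def __init__(self):
        self.stages = {}

    @contextmanager
    def stage(self, name):
        start = time.perf_counter()
        try:
            yield
        finally:
            elapsed = time.perf_counter() - start
            self.stages[name] = self.stages.get(name, 0.0) + elapsed

    def total(self):
        return sum(self.stages.values())


timer = StartupTimer()

with timer.stage("model_weight_load"):
    time.sleep(0.01)  # stand-in for copying weights from storage to GPU memory
with timer.stage("graph_compilation"):
    time.sleep(0.01)  # stand-in for building optimized execution plans
with timer.stage("initial_warmup"):
    time.sleep(0.01)  # stand-in for preliminary inference passes

for name, seconds in timer.stages.items():
    print(f"{name}: {seconds:.3f}s")
print(f"total: {timer.total():.3f}s")
```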

Instant-On Startup: Paiton’s Strategic Advantage

Paiton combines AMD’s GPU architecture with proprietary optimizations to substantially reduce startup times:

- Hyper-Optimized Weight Loading: Leveraging AMD’s ultra-fast HBM3 memory with parallel data transfers.

- Accelerated Graph Compilation: Custom routines that entirely eliminate compilation wait times.

- Intelligent Warm-up: Advanced priming strategies guaranteeing immediate and sustained responsiveness.

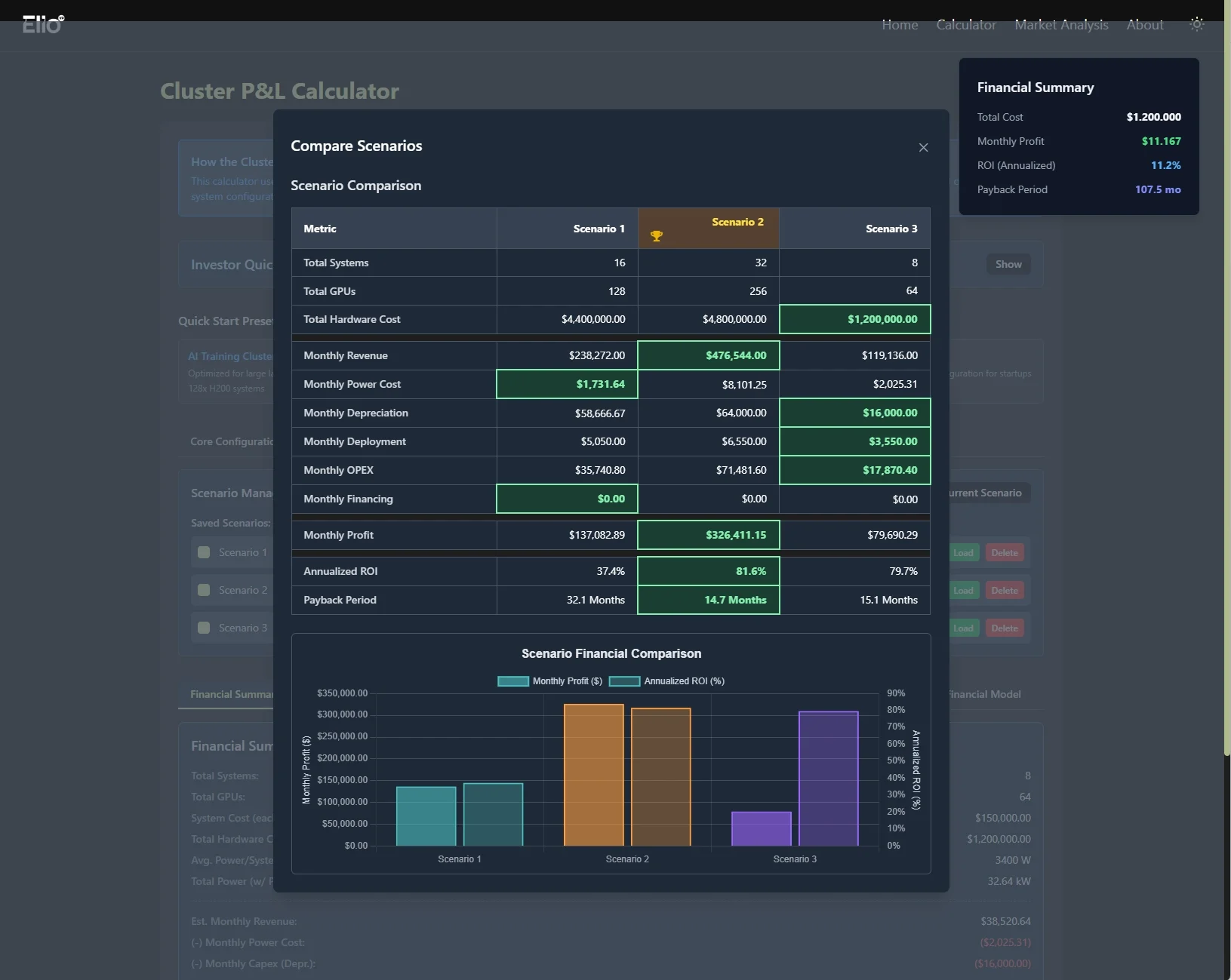

Startup Comparison: Llama-3.1-405B-Instruct-FP8-KV

| Stage | Standard vLLM (sec) | AMD + Paiton (sec) | Time Reduction |
| --- | --- | --- | --- |
| Model Weight Load | 71.24 | 64.28 | 9.8% |
| Memory Profiling | 69.97 | 38.63 | 44.8% |
| Graph Compilation | 27.00 | 0.00 | 100% (eliminated) |
| Initial Warm-up | 98.37 | 40.59 | 58.7% |
| Total Startup | 266.58 | 143.50 | 46.2% |

Real-world Impact: Deploy a fully operational 405B-parameter LLM in under 2.4 minutes (143.50 seconds, versus 266.58 seconds with standard vLLM).
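The percentages and totals in the startup table can be re-derived from the per-stage timings. The short script below does that arithmetic; the stage values are copied from the table, while the helper name `reduction_percent` is ours for illustration.

```python
# Per-stage startup times in seconds: (standard vLLM, AMD + Paiton),
# copied from the startup comparison table.
stages = {
    "Model Weight Load": (71.24, 64.28),
    "Memory Profiling": (69.97, 38.63),
    "Graph Compilation": (27.00, 0.00),
    "Initial Warm-up": (98.37, 40.59),
}


def reduction_percent(baseline, optimized):
    """Percentage of the baseline time that was removed."""
    return 100.0 * (baseline - optimized) / baseline


baseline_total = sum(baseline for baseline, _ in stages.values())
paiton_total = sum(paiton for _, paiton in stages.values())

for name, (baseline, paiton) in stages.items():
    print(f"{name}: {reduction_percent(baseline, paiton):.1f}% less time")

print(f"Total: {baseline_total:.2f}s -> {paiton_total:.2f}s "
      f"({reduction_percent(baseline_total, paiton_total):.1f}% less time, "
      f"{paiton_total / 60:.2f} minutes)")
```

Note that the reduction is measured against the baseline time, so a stage that drops to zero seconds shows as a 100% reduction.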

Deep Dive into Tensor Parallelism: The Paiton Edge

Tensor Parallelism (TP) splits each layer’s weights across GPUs so that they compute in parallel, which makes it vital for harnessing multi-GPU configurations. At Paiton, we’ve invested heavily in optimized kernels and an enhanced communication layer tailored specifically to AMD’s MI300X GPUs. Our proprietary approach to TP rests on three elements:

- Highly Optimized Kernels: Precision-crafted to maximize GPU compute efficiency and reduce intra-node latency.

- Advanced Communication Layer: Significantly streamlined inter-GPU communication, drastically reducing overhead.

- Scalable Architecture: Consistent, predictable scaling even in complex multi-GPU deployments.
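For readers new to the idea, the general principle of tensor parallelism can be shown with a toy column-parallel linear layer in plain Python. This is a textbook illustration only, not Paiton’s proprietary kernels or communication layer: the function names are hypothetical, the "GPUs" are simulated as list slices, and the all-gather step is a simple concatenation.

```python
from itertools import chain


def matmul(x, w):
    """Multiply x (n x k) by w (k x m); both are lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*w)] for row in x]


def split_columns(w, num_shards):
    """Split weight matrix w column-wise into num_shards equal shards."""
    cols = len(w[0])
    if cols % num_shards != 0:
        raise ValueError("column count must be divisible by num_shards")
    width = cols // num_shards
    return [[row[i * width:(i + 1) * width] for row in w] for i in range(num_shards)]


def column_parallel_linear(x, w, num_shards):
    """Compute x @ w by giving each simulated device one column shard of w."""
    shards = split_columns(w, num_shards)
    # Each partial product would run on its own GPU in a real deployment.
    partials = [matmul(x, shard) for shard in shards]
    # Simulated all-gather: concatenate the partial outputs column-wise.
    return [list(chain.from_iterable(p[r] for p in partials)) for r in range(len(x))]


x = [[1, 2], [3, 4]]
w = [[1, 2, 3, 4, 5, 6, 7, 8],
     [8, 7, 6, 5, 4, 3, 2, 1]]

# Eight shards mirror the eight GPUs in the testbed.
sharded = column_parallel_linear(x, w, num_shards=8)
print(sharded == matmul(x, w))
```

The sharded result matches the single-device product exactly; in practice the cost that matters is the gather step, which is why the communication layer gets so much attention.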

Sustained Performance Gains with Paiton’s TP

| Metric | Paiton Average | Improvement vs. Aiter |
| --- | --- | --- |
| Request Throughput (req/s) | 2.35 | 20.5% higher |
| Output Token Throughput (tok/s) | 462.52 | 13.4% higher |
| Total Token Throughput (tok/s) | 1009.91 | 17.3% higher |
| Mean TTFT, time to first token (ms) | 2581.94 | 42.8% lower |
| Mean E2EL, end-to-end latency (ms) | 11051.53 | 24.3% lower |
| Mean ITL, inter-token latency (ms) | 43.28 | 10.6% lower |

Paiton’s combination of rapid deployment capabilities and superior runtime performance uniquely positions AMD-based infrastructure for enterprise-grade AI deployments.
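The metrics in the table above can be cross-checked against each other. The sketch below assumes the usual serving-benchmark definitions (total tokens = input + output tokens, and end-to-end latency ≈ TTFT plus one inter-token interval per additional output token). Because it works with rounded averages rather than per-request data, the relationship holds only approximately.

```python
# Paiton averages copied from the performance table.
request_throughput = 2.35          # req/s
output_token_throughput = 462.52   # tok/s
total_token_throughput = 1009.91   # tok/s
mean_ttft_ms = 2581.94
mean_itl_ms = 43.28
mean_e2el_ms = 11051.53

# Tokens per request follow from dividing token throughput by request throughput.
mean_output_tokens = output_token_throughput / request_throughput
mean_total_tokens = total_token_throughput / request_throughput
mean_input_tokens = mean_total_tokens - mean_output_tokens

# Assumed relationship: E2EL ~= TTFT + (output_tokens - 1) * ITL.
estimated_e2el_ms = mean_ttft_ms + (mean_output_tokens - 1) * mean_itl_ms
relative_gap = abs(estimated_e2el_ms - mean_e2el_ms) / mean_e2el_ms

print(f"output tokens per request: {mean_output_tokens:.1f}")
print(f"input tokens per request:  {mean_input_tokens:.1f}")
print(f"estimated E2EL: {estimated_e2el_ms:.0f} ms (reported {mean_e2el_ms:.0f} ms)")
print(f"relative gap: {100 * relative_gap:.2f}%")
```

The estimate lands within a fraction of a percent of the reported end-to-end latency, which suggests the throughput and latency figures are internally consistent.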

Shaping the Future of LLM Deployment

Our significant breakthroughs with Llama-3.1-405B-Instruct-FP8-KV represent a transformative shift, providing unprecedented agility, efficiency, and scalability for deploying large-scale AI workloads.

Transparency: Our Commitment to Authenticity

At Paiton, we pride ourselves on real, measurable results. Our journey is distinct:

- Lean & Independent: Just three engineers, entirely self-funded with zero external investment or support.

- Self-Reliance: No financial, technical, or promotional assistance from AMD or other external entities; fully self-financed investment in our MI300X hardware.

- Proven Expertise: Previously shipped over 250,000 GPUs, thousands of AMD EPYC CPUs, and numerous AI servers, successfully spinning up large-scale AI clusters worldwide.

- Original Innovation: Unlike many startups leveraging open-source software or superficial wrappers, we build everything, including deep kernel optimizations, from scratch.

- Direct Message to AMD: While AMD’s Aiter library is commendable, our compact team achieves consistently superior performance, demonstrating efficiency and innovation unmatched even by larger, funded teams.

- Results Over Hype: We never shout before we deliver. Unlike others that secure millions in funding with limited tangible outcomes, we achieve groundbreaking results first and let those results speak for themselves.

Together, let’s redefine what’s achievable with cutting-edge AI technology and AMD hardware.